DataOps Principle #14: Analytics is Manufacturing

“Let’s switch gears” We’ll take a break from talking about dataflows and turn attention back to Power BI datasets. In Part 22, I proposed using the Gherkin language to implement testing - thanks to version 4 of Pester. At the time I was using it for dataflows, but I have since realized I could use it to gain efficiencies while testing Power BI datasets.

DataOps Principle #14: Analytic pipelines are analogous to lean manufacturing lines. We believe a fundamental concept of DataOps is a focus on process-thinking aimed at achieving continuous efficiencies in the manufacture of analytic insight.

Areas of Improvement

After spending some time with testing Power BI datasets using a technique from Part 4, I identified some areas for improvement:

Multiple DAX test files - As the number of DAX test files grew, data analysts had to have more test files open in something like DAX Studio, then go through each file and run the query manually to make sure the tests passed in each file. There had to be a more efficient way.

Integrating different types of tests - While DAX-based tests helped test certain aspects of the datasets (like DAX measures, calculated columns, and some table contents), I wanted to merge other ways to test the datasets. This includes testing the schema and checking column values against regular expressions (you know I love regular expressions). If the tests could be integrated, then I could possibly track test results better to support monitoring for quality and performance.

Simplifying the testing process - As I have stated before in Part 21, Power BI’s largest user base is data analysts and not developers/computer scientists who are familiar with writing code that tests code. I wanted to make it as simple as possible to both create the tests, and run the tests locally. I also wanted to make sure the same method used to test locally could be leveraged in the pipeline during orchestration.

Making the Improvements

Pester Version 4 and its Gherkin language implementation seemed like a logical approach because I could abstract the technical work within the ‘.steps.ps1’ file from the .feature file which would contain tests that could be written in plain language by a data analyst. But there are a few challenges when running the same tests both on your local machine or against the Power BI Service (via a Continuous Integration pipeline):

Challenge #1 - Are we running these tests locally? When running tests locally we need to connect to the Power BI dataset differently than when the Power BI dataset is in the service. If local, Power BI creates a network port to connect to via the localhost. In the service, the connection requires an XMLA connection with a different syntax.

Challenge #2 - Which Power BI files are opened locally? When running tests locally we need to find which network port corresponds to each Power BI file that may be opened. We also need to make sure we run tests for only the opened Power BI files.

After some trial-and-error, I produced a solution thanks to (1) how the awesome gentleman at GuyInACube used PowerShell to identify the local network ports opened for Power BI Desktop files, and (2) by reviewing DAX Studio’s way to figure out which network port was associated with the Power BI file opened. This is a great case for why open source is awesome, and I’ll return the favor by making the Get-PowerBIReportsOpenedLocally module open source as well.

With those challenges overcome, I could build a potential framework to the three areas of improvement. Based on a sample project I have uploaded to GitHub here is what it looks like:

The Testing Structure

Building and running tests are based on the Behavior Drive Development (BDD) concept. Pester Version 4’s implementation of the Gherkin language facilitates BDD testing by defining tests through written narratives and acceptance criteria.

Building tests are based on two files:

.feature file

Within this project there are two sample .feature files:

SampleModelSchema.feature

This feature file shows how you can test the schema and content of the Power BI dataset. The following is an example implementation:

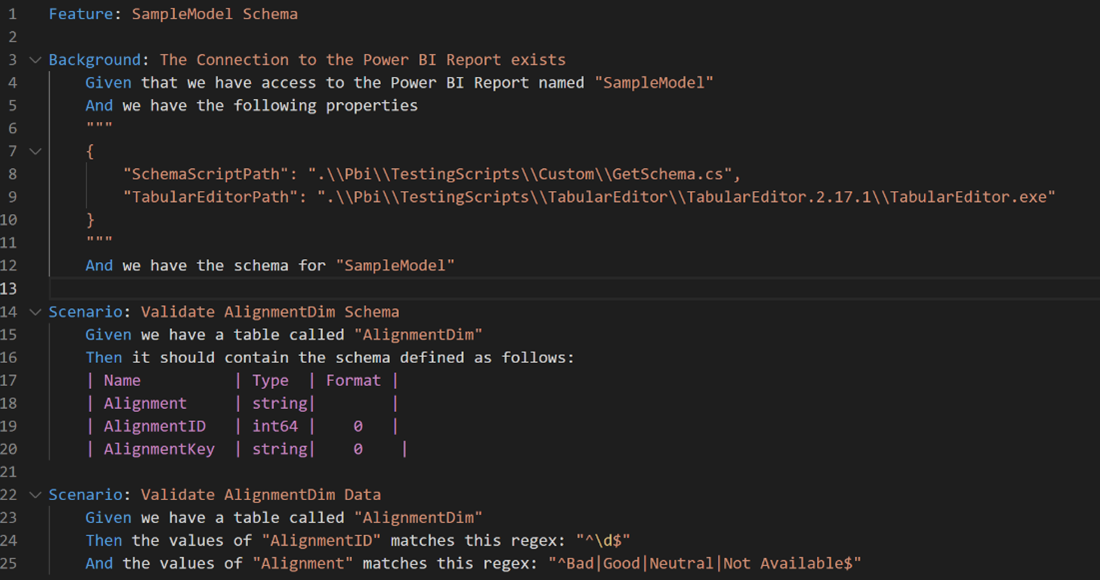

Figure 1 - Example of SampleModelSchema.feature file

Each sentence executes a test, which for the example above does the following:

- Line 1: Defines the type of test you are performing.

- Lines 2-12: The Background section verifies the Power BI report exists, you have access to the files (in this case the wonderful Tabular Editor) to get the schema and verify you can retrieve it.

- Lines 14-20: This Scenario is a set of steps to verify the ‘AlignmentDim’ table exists and the schema defined is correct.

- Lines 22-25: This Scenario is a set of steps that verifies the ‘AlignmentDim’ table has certain columns that match the regular expressions defined.

SampleModelDAX.feature

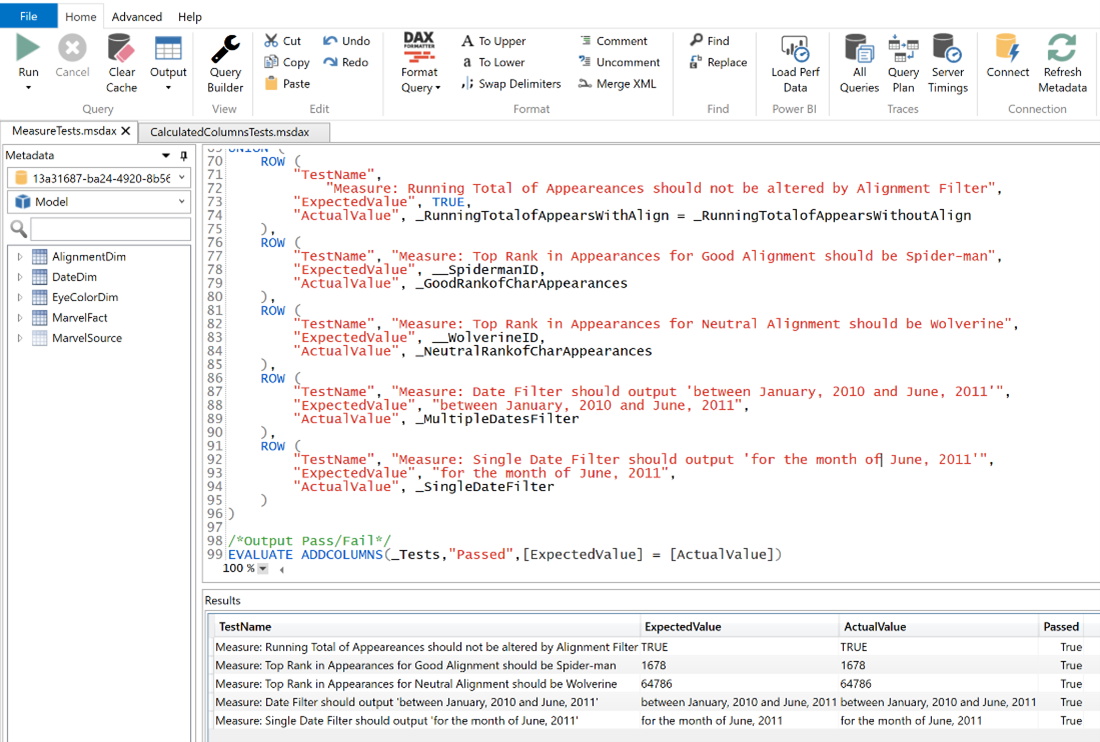

This feature file shows how you can test a Power BI dataset using DAX. All tests should result in an output that is consistent so that the automated tests can verify the tests pass. Fortunately, with DAX, we can create that schema. The example below provides the output of a set of test cases and follows a simple format:

Figure 2 - Example of DAX testing schema

Test Name- Description of the test prefixed by MEASURE, CALCULATED COLUMN, or TABLE to indicate what part of Power BI model is being tested.

Expected Value - What the test should result in. This should be a hardcoded value or function evaluated to a Boolean.

Actual Value - The result of the test under the current dataset.

Test Passed - True if the expected value matches the actual value. Otherwise, the result is false.

By leveraging a consistent schema to build tests using DAX, we can automate the testing of multiple DAX files. In this template, we have 3 files (CalculatedColumnsTests, MeasureTests, and TableTests). Therefore, the feature file to run multiple DAX test files is shown in the example below.

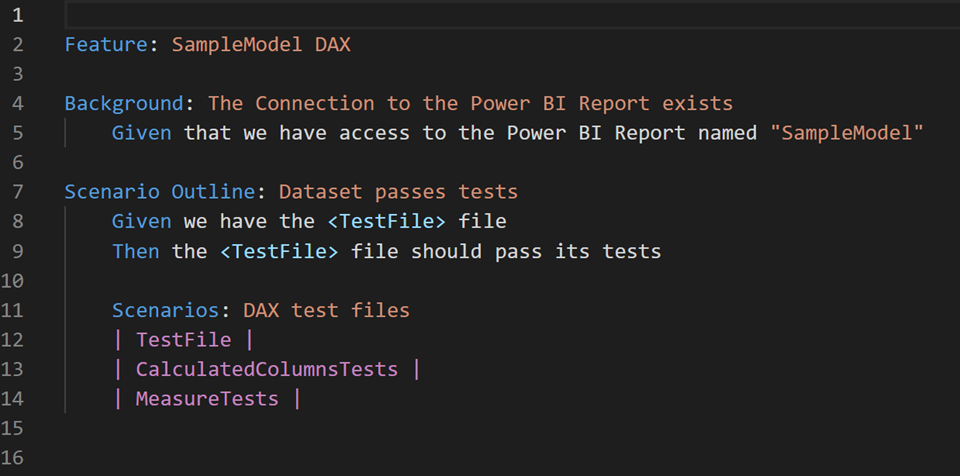

Figure 3 - Example of SampleModelDAX.feature file

Each sentence executes a test, which for the example above does the following:

- Line 1: Defines the type of test you are performing.

- Lines 4-5: Verifies that Power BI reports exists.

- Lines 7-9: Using the Scenario Outline we can create a loop to run each DAX test file.

- Lines 11-14: With the Scenario Outline defined we can just add the name of the DAX file to the table containing one column called “TestFile”. Then each file will be run (DAX query) against the Power BI dataset and the test will verify the expected values and actual values match.

.steps.ps1 file

Each sentence in the feature file is backed by a “.steps.ps1” with the same name as the feature file.

Since we want to take advantage of the same PowerShell code to run similar schema and DAX tests, all “.steps.ps1” files reference the file “Test-Support.steps.ps1”.

The Test-Support.steps.ps1 file supports the following test cases:

Background Tests

Given ‘that we have access to the Power BI Report named “{PBIFile}”’

Verifies the parameter {PBIFile} exists as a .pbix file within a subfolder under the /Pbi folder.

Schema Tests

And “we have the following properties”

Accepts a json file that lists the location of Tabular Editor and the GetSchema.cs file. This merely checks to see if those files exist so we can conduct further tests.

And “we have the schema for {TableName}”

Accepts the {TableName} parameter and uses TabularEditor and the GetSchema.cs file to pull the schema information for the table. If the test case can get this information, it passes.

Then “it should {Contain or Match} the schema defined as follows:”

After the prior schema test this test accepts a table of information with the columns Name, Type, and Format such as:

| Name | Type | Format |

| Alignment | string| |

- Name: This is the name of the column.

- Type: This is the type of the column (e.g., string, number, boolean).

- Format: This is the format of the column. You can leave this blank if the format does not need to be tested.

This test accepts a parameter {Contain or Match}. If the parameter entered is ‘Contain’ then this test will make sure each column exists and matches the type and format. If the parameter entered is ‘Match’ then this test will make sure the table has all the columns defined in the test, that each column exists, and that each column matches the type and format. The ‘Match’ value is strict and makes sure no new columns exist in the dataset compared to the defined table in the feature file.

Regex Tests

Given ‘we have a table called “{TableName}”’

Accepts the {TableName} parameter and makes sure table exists in the dataset.

And ‘the values of “{ColumnName}” matches this regex: “{Regex}”’

After the prior table test, this function accepts the {ColumnName} parameter and {Regex} parameter. This verifies that the column in the table passes the regular expression. The Regular Expression format follows the .Net Regular Expressions format.

DAX Tests

Given ‘we have the {TestFile} file’

Verifies the parameter {TestFile} exists as a .msdax or .dax file within the subfolder defined by the PBIFile in the Background tests.

Then {TestFile} file should pass its tests’

This queries the dataset defined by the PBIFile in the Background tests using the DAX file defined by the parameter {TestFile}. If the query returns an acceptable schema and all the expected values equal the actual values in the query results, the test will pass.

Running Tests

This project has a script called “Run-PBITests.ps1” that exists at the root of the project.

This script allows you to run the tests you created for each Power BI file that is open. Here are the steps:

- Within Visual Studio Code, open your project folder.

- Then within Visual Studio Code click the terminal menu option and select "New Terminal".

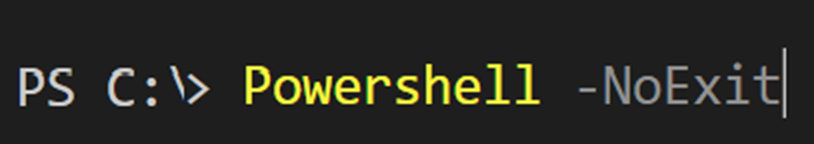

- From the terminal enter the command "Powershell -NoExit"

This commands makes sure we are running classic PowerShell and not PowerShell Core. The command Invoke-AsCmd has not been ported to work with PowerShell Core (as of August 2022) by Microsoft.

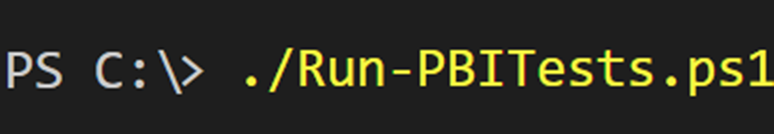

This commands makes sure we are running classic PowerShell and not PowerShell Core. The command Invoke-AsCmd has not been ported to work with PowerShell Core (as of August 2022) by Microsoft. - Then from the terminal enter the command "./Run-PBITests.ps1"

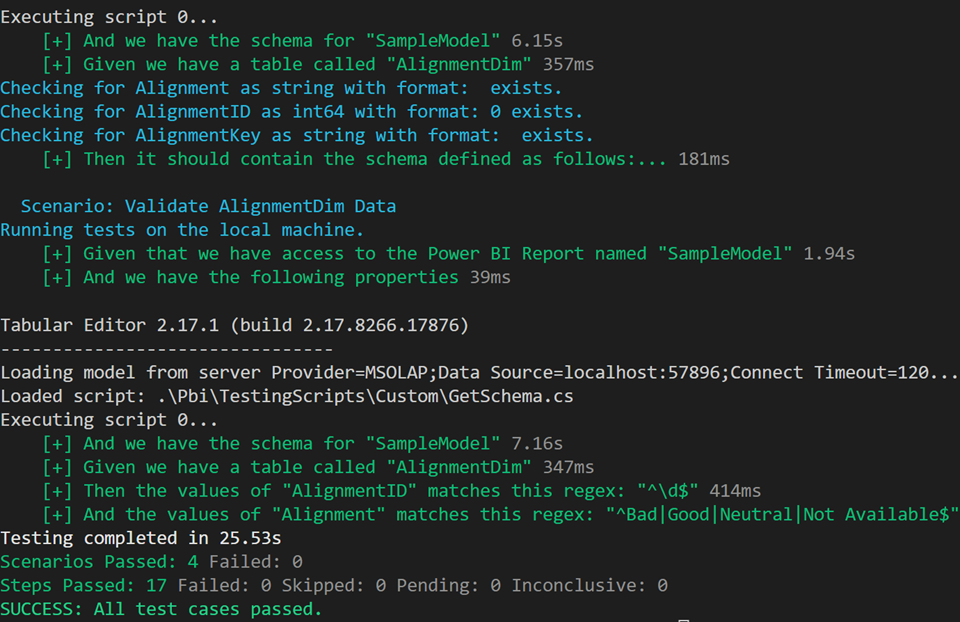

- If the test cases pass, then you will see in the terminal a confirmation of success with a message "SUCCESS: All test cases passed."

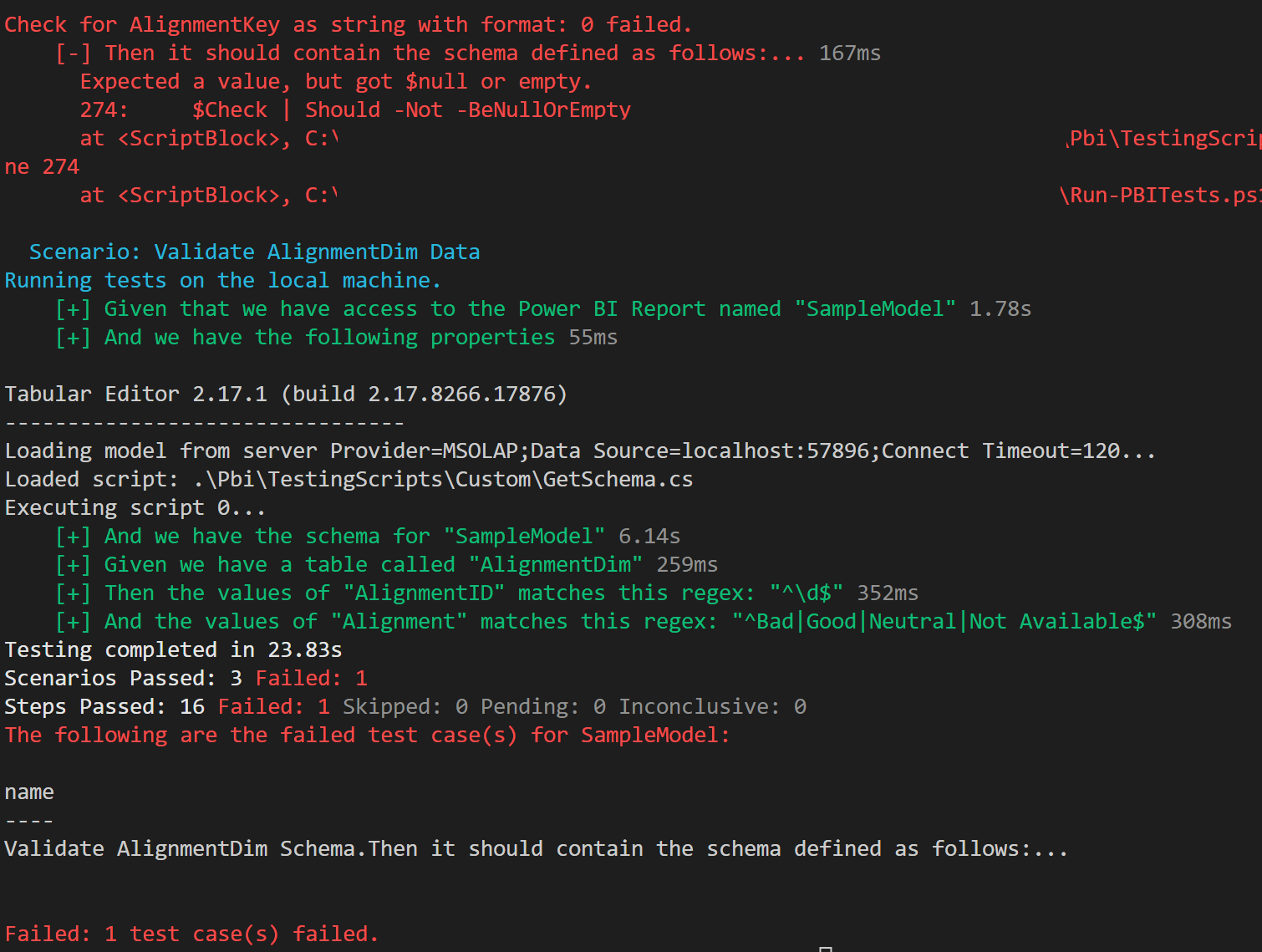

- If a test fails, then you will see in the terminal which test cases failed (see example).

Orchestration

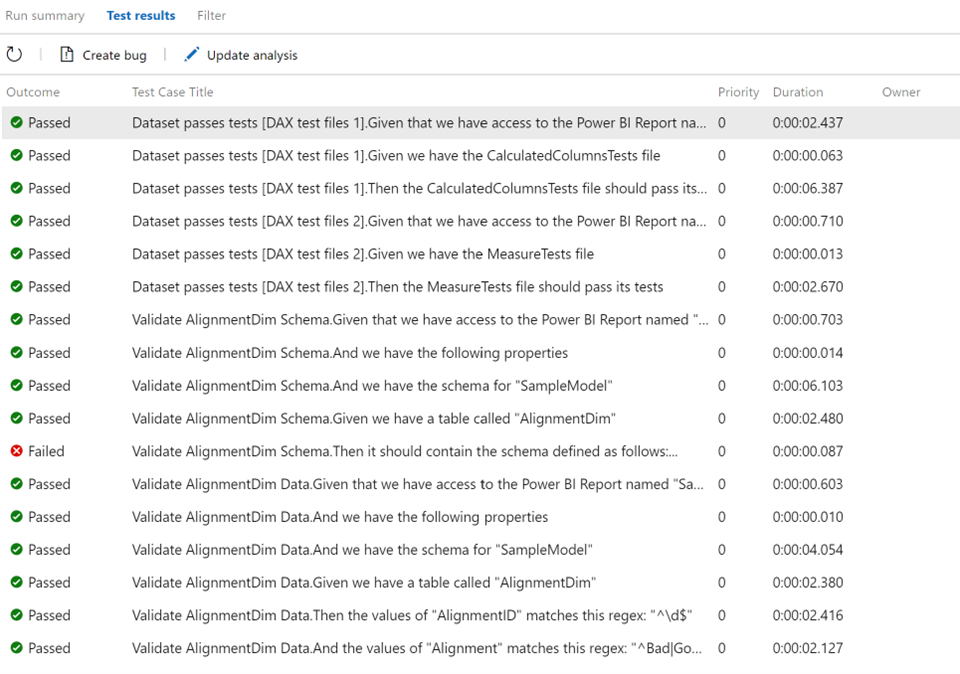

One of my favorite parts about Pester is that it will allow you to produce the test results in the NUnit format. Therefore, now when the testing completes in a CI pipeline it can publish those results to Azure Test Plans. Thus, we have a more centralized way to monitor and track testing.

Figure 4- Example of Publish Test Results in Azure DevOps

Sharing A Template Project

I’ve shared instructions on how to setup a template project, cloning that project locally, and running tests on GitHub. My hope is that you can leverage these samples to start to build a suite of datasets backed by source control, tested using an easy-to-use framework, and automated through orchestration.