DataOps Principle #15: Quality is Paramount

Yep, I did it to myself… I thought I had fixed an issue in the data model, but I ended up creating a new one. A client had called about a calculation issue, and I had opened Power Query to investigate. I was looking at several steps in Power Query and to help with my investigation I applied a filter step to Table X. As I navigate to Tables Y and Z, I find the issue, apply a fix to Table Z and close Power Query. So, I forgot to remove the filter on Table X and I published the dataset, only to create another issue that I learned about hours later. Thankfully we had instituted best practices in version control so the dataset didn’t go to production with the new error, but I was frustrated that the mistake was so easy to make. Also, I found it vexing that there was no safety net for either me or my colleagues to keep us from falling flat on our faces with these kind of easy mistakes. Our team needed a safety net.

Have you applied a filter on a table in Power Query and forget to remove it later?

Have you applied a filter on a table in Power Query and forget to remove it later?

DataOps Principle #15 - Analytic pipelines should be built with a foundation capable of automated detection of abnormalities and security issues in code, configuration, and data, and should provide continuous feedback to operators for error avoidance.

The biggest challenge to applying this principle within Power BI is the lack of concrete testing frameworks. Yes, there are great third-party tools (like Dax Studio) to help conduct tests, but not necessarily a great framework to instill good testing of Power BI. How do we consistently empower Power BI developers to build sound models, build tests easily, and establish a basis of tests to protect the development team from regression errors.

Best Practices

I have learned if you follow these best practices, you won’t eliminate all errors, but you sure will reduce them.

1) Have a Test Dataset – In order to test effectively you need to have a known underlying dataset to validate your Power BI report. For example, if you are importing a SQL database you don’t adjust the query to be a window of time in the past or clone the data. The goal is to have a static set of data to work with, so the only variables that would change during a test is the code you or your team has changed in Power BI.

2) Test The Tables – As of August 2021 you can’t test each line of M code in Power Query that is generated when you build your tables. However, if you follow the prior best practice you can test to make sure the composition of the tables created by your code are well-formed. For example, you could test for:

- Number of rows in a fact table.

- Number of rows in a dimension.

- The presence of a value in a column that shouldn't be there.

- The existence of blank columns.

- The values in a custom column are correct.

So, in the case where you apply a filter to troubleshoot your Power BI file and forget to remove it, a properly defined test case would indicate the issue.

3) Test The DAX – If you follow the first best practice you should be able to test that a DAX measure or calculated column has the appropriate result. For example, let’s say you have a DAX measure “IF(SUM(‘TableX’[ColumnY])<0,”Condition 1”,”Condition 2”)”. To test properly you should create conditions to test when:

- The summation is > 0

- The summation is = 0

- The summation is \< 0

- The summation is blank

These types of tests are not just for numeric-based measures/columns. You can also test for potential issues with time zones, when source columns are blank, etc.

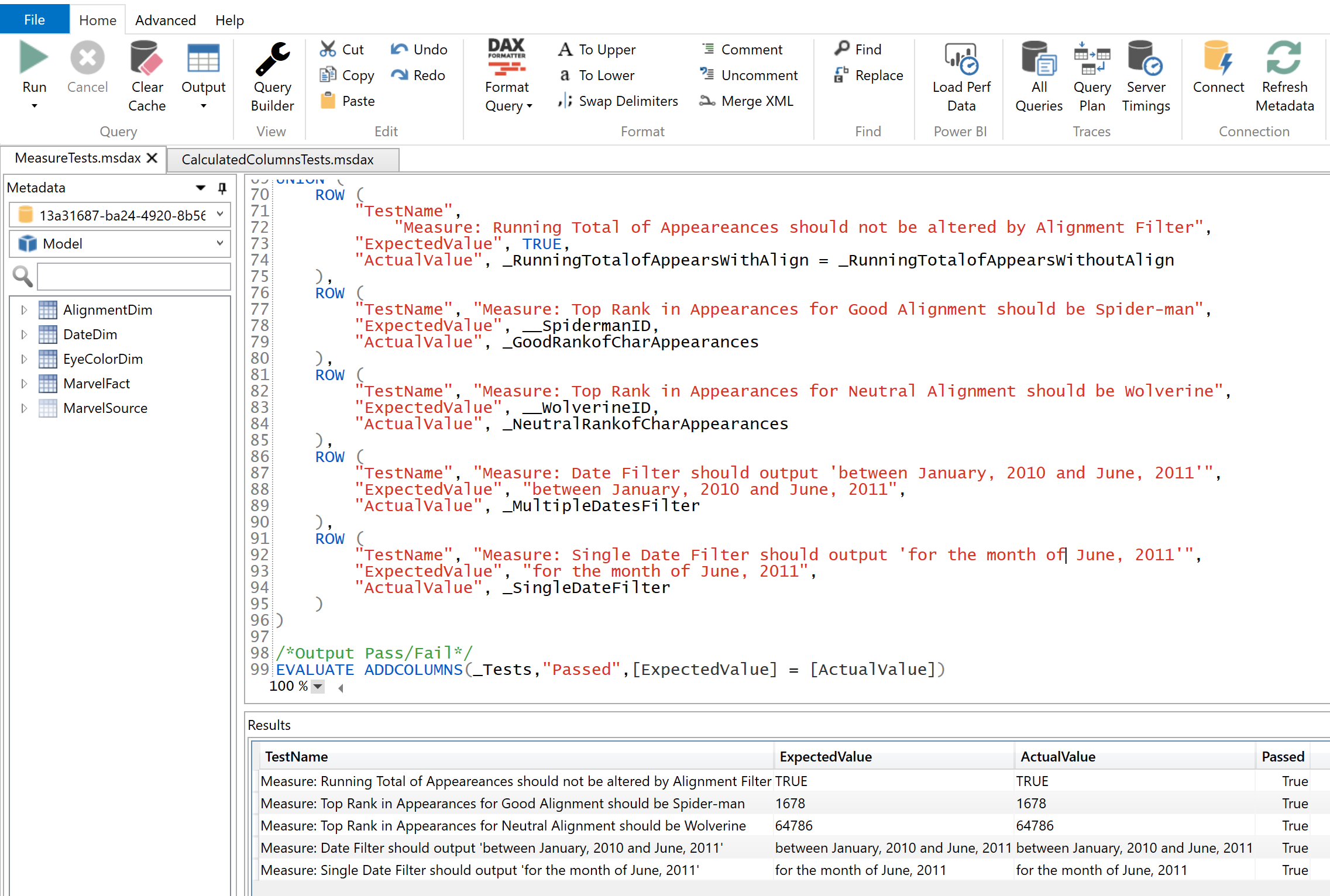

4) Have a Test Schema – All tests should result in an output that is consistent so that the team can understand the results and find failed tests quickly. Fortunately, with DAX, we can create that schema. Figure 2 is an example of the output of a set of test cases and follows a simple format:

- Test Name- Description of the test prefixed by MEASURE, CALCULATED COLUMN, or TABLE to indicate what part of Power BI model is being tested.

- Expected Value - What the test should result in. This should be a hardcoded value or function evaluated to a Boolean.

- Actual Value - The result of the test under the current dataset.

- Test Passed - True if the expected value matches the actual value. Otherwise, the result is false.

5) Maintain Your Tests – To paraphrase Edsger Dijkstra, a famous computer scientist, testing will show the presence of flaws, not the absence of flaws. Therefore, as you encounter more issues (and you will) make sure to update the test dataset and the test cases to check for the presence of the issues. The goal is to never encounter the same issue twice in production!

6) Version The Tests – Just as I recommended in Part 3 that you should use version control with Power BI files, you should also include the tests when you check-in/commit your Power BI file. That way your colleagues can run the same tests to check for errors.

If you’re interested, I’ve shared an example of the tests along with a Power BI model on GitHub. I’ve also included instructions on how to run the tests using DAX Studio (a great product!).

In my next post for the series, I will combine the best practices in version control and the sample test cases and describe how to automate the testing and deployment of a Power BI file with Azure DevOps and PowerShell.