Make It Reproducible… for Gen1 Dataflows

While I look forward to all the new capabilities Gen2 dataflows offer, it may be a while before they are available to the wider audiences I work with. If my aging memory serves me right, it took nearly two years for Gen1 dataflows to come to the Government Community Cloud (GCC). Furthermore, even if Fabric pricing becomes cost-competitive, not every client will be prepared to factor the cost of purchasing Fabric into their current solutions.

Fortunately, Gen1 dataflows still represent a viable production option, particularly for those interested in implementing a Medallion architecture within the Power BI service. Roughly a year ago, I offered a Power BI-based solution for the version control, testing, and orchestration of Gen1 dataflows using the Bring Your Own Storage (BYOS) feature. However, BYOS is not always an option; as of Fall 2023, it is not available to GCC users.

To improve cycle times and reduce errors in environments where the BYOS solution was not feasible, I explored alternatives. Thankfully, I had access to version control through Azure DevOps, so I got to work developing a strategy for backing up the dataflows my teams were working on.

The Polling Method

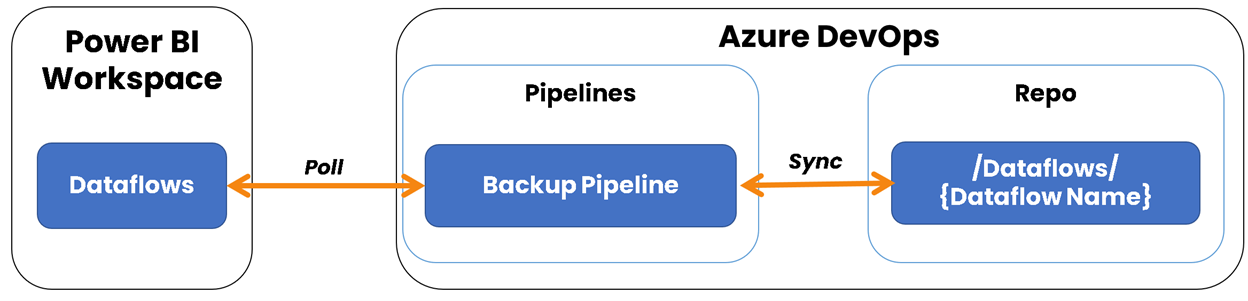

Without BYOS, I knew that capturing every single change to the dataflow was impossible. However, I could poll for changes at regular intervals and commit those changes to an Azure Repo (see Figure 1). That way, if I needed to recover a past version or a deleted dataflow, my teams would not experience significant setbacks.
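To make that trade-off concrete, the short Python simulation below models what a poller can see. It is illustrative only and not part of the solution: the function name `captured_versions` and the edit and poll times are hypothetical, and it assumes each poll observes only the dataflow's latest state.

```python
from datetime import datetime, timedelta


def captured_versions(edit_times, poll_times):
    """Return the edit timestamps a poller would end up committing.

    At each poll only the dataflow's current state (its most recent edit)
    is visible, so edits made between two polls are never seen.
    """
    captured = []
    last_seen = None
    edits = sorted(edit_times)
    for poll in sorted(poll_times):
        # Most recent edit that happened at or before this poll
        visible = [e for e in edits if e <= poll]
        if not visible:
            continue
        current = visible[-1]
        # Commit only when the state changed since the previous poll
        if current != last_seen:
            captured.append(current)
            last_seen = current
    return captured


# Hypothetical edits at 09:05, 09:20, 09:50, 11:10; hourly polls from 10:00
start = datetime(2023, 10, 2, 9, 0)
edits = [start + timedelta(minutes=m) for m in (5, 20, 50, 130)]
polls = [start + timedelta(hours=h) for h in (1, 2, 3)]
print([e.strftime("%H:%M") for e in captured_versions(edits, polls)])
# → ['09:50', '11:10']
```

The 09:05 and 09:20 states are overwritten before the first poll, so they never reach the repo. A shorter polling interval narrows that gap but cannot close it.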

Figure 1 - Illustrates the high-level polling process and components used to back up dataflows to Git.

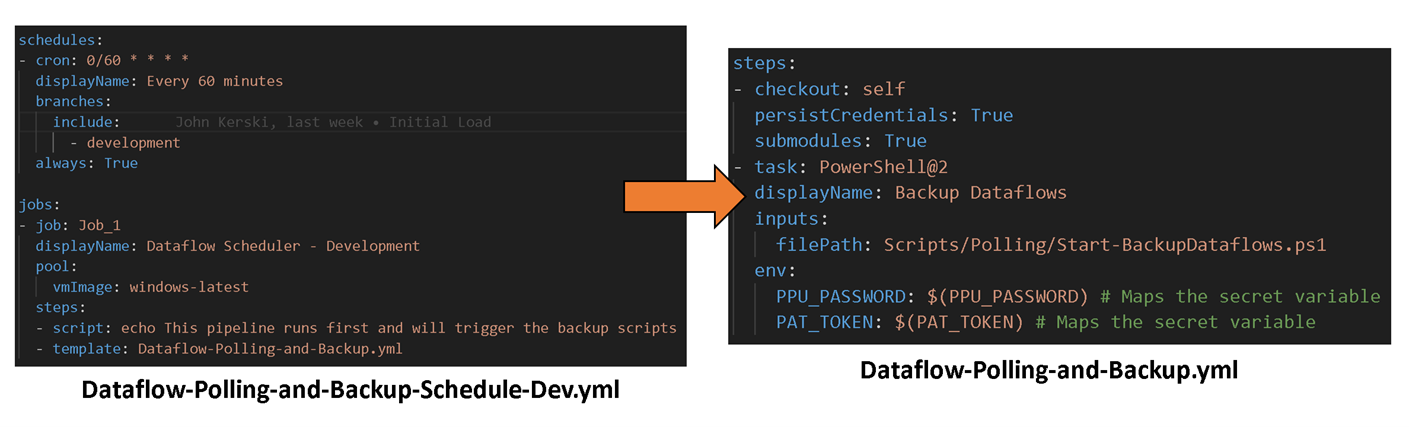

This polling process is driven by two YAML files:

1) Dataflow-Polling-and-Backup-Schedule-Dev - This file runs on a schedule and kicks off the second YAML file. Separating the schedule from the backup work prevents an infinite loop in future iterations of this solution, when we want a branch update to trigger a continuous integration (CI) process (hint: it involves my favorite topic).

2) Dataflow-Polling-and-Backup - This file runs the Start-DataflowBackup.ps1 script, which handles:

Installing and loading the appropriate PowerShell modules.

Logging in to the Power BI service using a Premium Per User (PPU) account (see Security Notes).

Retrieving the list of Power BI dataflows.

Checking whether the Azure Repo (I use "repo" and "repository" synonymously with Azure Repo) already contains the dataflow. If not, the script adds the dataflow to the repository. If it does, the script compares the “modifiedTime” property of the copy in the Power BI service with that of the copy in the repository. If the timestamps do not match, the script commits a new version to the repository.

Figure 2 - Illustrates how the two YAML files implement the polling method.
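The repository check in the last step of the Start-DataflowBackup.ps1 list can be sketched in Python. This is not the author's PowerShell script; it only illustrates the add-or-update decision. It assumes each exported dataflow JSON carries a top-level `modifiedTime` and that the repo keeps one file per dataflow keyed by its ID. The function name `plan_backup` and the sample data are hypothetical.

```python
import json


def plan_backup(service_exports, repo_files):
    """Decide what to do with each dataflow exported from the service.

    service_exports: dict of dataflow ID -> exported JSON text (service copy).
    repo_files: dict of dataflow ID -> JSON text last committed to the repo.
    Returns (action, dataflow_id) tuples: "add" when the repo lacks the
    dataflow, "update" when the modifiedTime values differ.
    """
    actions = []
    for dataflow_id, exported in sorted(service_exports.items()):
        if dataflow_id not in repo_files:
            actions.append(("add", dataflow_id))
            continue
        service_time = json.loads(exported).get("modifiedTime")
        repo_time = json.loads(repo_files[dataflow_id]).get("modifiedTime")
        # Differing timestamps mean the dataflow changed since the last backup
        if service_time != repo_time:
            actions.append(("update", dataflow_id))
    return actions


# Hypothetical sample: df-1 is new, df-2 changed, df-3 is unchanged
service = {
    "df-1": json.dumps({"name": "Bronze Sales", "modifiedTime": "2023-10-02T10:15:00Z"}),
    "df-2": json.dumps({"name": "Silver Sales", "modifiedTime": "2023-10-02T09:00:00Z"}),
    "df-3": json.dumps({"name": "Gold Sales", "modifiedTime": "2023-10-01T08:00:00Z"}),
}
repo = {
    "df-2": json.dumps({"name": "Silver Sales", "modifiedTime": "2023-10-01T17:30:00Z"}),
    "df-3": json.dumps({"name": "Gold Sales", "modifiedTime": "2023-10-01T08:00:00Z"}),
}
print(plan_backup(service, repo))
# → [('add', 'df-1'), ('update', 'df-2')]
```

Both actions end in a commit, while unchanged dataflows produce none, so the history only grows when a dataflow actually changes.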

Security Notes

The polling method depends on two major security components:

1) A Premium Per User (PPU) account that can log in to the Power BI service and access the workspace housing the dataflows.

2) A Personal Access Token (PAT) with read and write access to the repository storing the dataflows.
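For context on the second component, here is a minimal Python sketch of how a PAT is commonly presented to Azure DevOps over HTTP: as the password half of a Basic credential with an empty user name. The function name `azure_devops_auth_header` is hypothetical, and the actual script may pass the PAT differently.

```python
import base64


def azure_devops_auth_header(pat: str) -> dict:
    """Build an HTTP Basic Authorization header from an Azure DevOps PAT.

    The user name is left empty, so the encoded credential is ":<PAT>".
    """
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


print(azure_devops_auth_header("abc"))
# → {'Authorization': 'Basic OmFiYw=='}
```

Because anyone holding the PAT can write to the repository, treat it like a password, for example by storing it as a secret pipeline variable rather than in the YAML file.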

Restoring a Dataflow

To revert to a prior version of a dataflow, download its JSON file from the repository and import it into your Power BI workspace. If a dataflow with the same name already exists, Power BI appends a number to the end of the imported dataflow's name. You'll then have to delete the old dataflow and relink any dependencies. It's a pain, but at least you have a version to restore. :)

Try It for Yourself

To illustrate the polling method in action, I've provided an installation script on GitHub. This script simplifies the setup by creating a Power BI workspace and Azure DevOps projects while configuring the settings the process needs.

If you are going to Community Summit North America, stop by my session on October 18th, where I will go in depth on dataflow version control and testing. For even more dataflow content, stay tuned for my next article, where I will cover testing dataflows using a technique Luke Barker and I developed.

This article was edited by my colleague and senior technical writer, Kiley Williams Garrett.