Weaving DataOps into Microsoft Fabric – Invoke-DQVTesting and Orchestration

In my last article, I discussed how to automate testing on your local machine using the Invoke-DQVTesting module. While this is helpful, Invoke-DQVTesting can also automate testing in an Azure DevOps pipeline, providing continuous integration and continuous deployment for your teams. This is critical to meeting the principle of Orchestrate and having your teams spend less time on manual tasks.

DataOps Orchestrate: The beginning-to-end orchestration of data, tools, code, environments, and the analytic team's work is a key driver of analytic success.

PBIP Deployment & DAX Query View Testing Pattern

So how do you accomplish this? Well, the consultant answer would be “it depends,” but I’d rather provide a scenario that I’ve seen before. I’ll call this the “PBIP Deployment & DAX Query View Testing Pattern.”

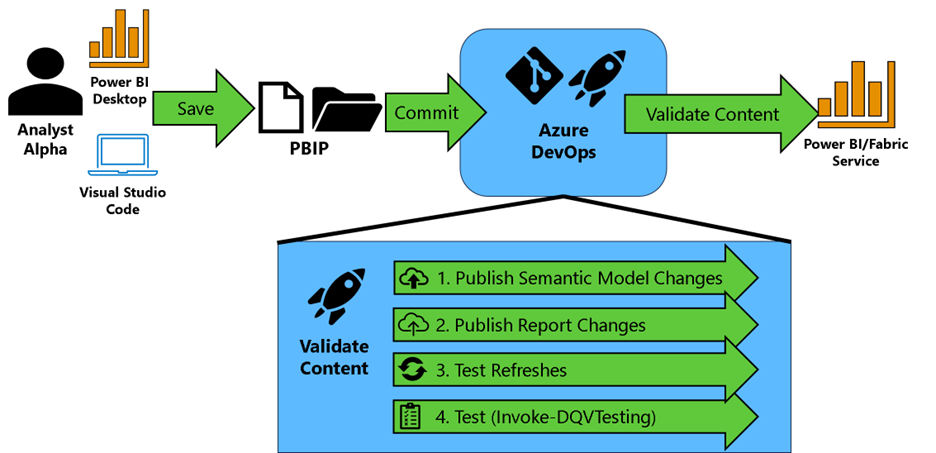

Figure 1 – PBIP Deployment & DAX Query View Testing Pattern

In the pattern depicted in Figure 1, your team saves their Power BI work in the PBIP extension format and commits those changes to Azure DevOps.

Then an Azure Pipeline is triggered to validate the content of your Power BI semantic models and reports by performing the following:

1. The semantic model changes are identified using the “git diff” command. Semantic models that have changed are published to a premium-backed workspace using Rui Romano's Fabric-PBIP script. The question now is, which workspace do you deploy to? I typically promote to a Build workspace first, which provides an area to validate the content of the semantic model before promoting to a Development workspace that is shared by others on the team. This reduces the chance that a team member introduces an error in the Development workspace that could hinder the work being done by others in that workspace.
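As a rough sketch of the change-detection idea (not the actual pipeline script), the Python below takes file paths as printed by `git diff --name-only` and collects the PBIP item folders they belong to. It assumes the usual PBIP folder suffixes `.SemanticModel` and `.Report`; the function name and the sample paths are made up for illustration.

```python
from pathlib import PurePosixPath


def changed_pbip_items(changed_paths, suffix):
    """Return the sorted, de-duplicated PBIP item folders (names ending in
    `suffix`) that contain at least one changed file."""
    items = set()
    for raw in changed_paths:
        parts = PurePosixPath(raw).parts
        for i, part in enumerate(parts):
            if part.endswith(suffix):
                # Keep the path up to and including the item folder.
                items.add("/".join(parts[: i + 1]))
                break
    return sorted(items)


# Hypothetical output of `git diff --name-only <base> <head>`
diff_output = [
    "Sales/Sales.SemanticModel/model.bim",
    "Sales/Sales.SemanticModel/definition.pbism",
    "Sales/Sales.Report/report.json",
    "README.md",
]

models = changed_pbip_items(diff_output, ".SemanticModel")
reports = changed_pbip_items(diff_output, ".Report")
```

In a real pipeline the list of paths would come from running `git diff --name-only` between two commits, and each folder in `models` would then be handed to whatever publishing script you use.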

2. With the semantic models published to a workspace, the report changes are identified using the “git diff” command. Each changed report is evaluated for its “definition.pbir” configuration. If the byConnection property is null (meaning the report is not a thin report), the script identifies the local semantic model (example in Figure 2). If the byConnection property is not null, we assume the report is a thin report and is configured appropriately. Each report that has been updated is then published to the same workspace.

Figure 2 - Example of .pbir definition file
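To make the byConnection check concrete, here is a minimal Python sketch that classifies a report from the text of its definition.pbir file. The sample JSON is an illustrative stand-in rather than a copy of Figure 2, and the function name and return shape are assumptions of mine, not part of any published script.

```python
import json


def resolve_dataset_reference(pbir_text):
    """Classify a report from the text of its definition.pbir file.

    Returns ("thin", None) when byConnection is set, otherwise
    ("local", <relative path to the semantic model folder>).
    """
    reference = json.loads(pbir_text).get("datasetReference", {})
    if reference.get("byConnection") is not None:
        return ("thin", None)
    by_path = reference.get("byPath") or {}
    return ("local", by_path.get("path"))


# Illustrative file content (hypothetical model name)
sample_pbir = """
{
  "version": "1.0",
  "datasetReference": {
    "byPath": { "path": "../Sales.SemanticModel" },
    "byConnection": null
  }
}
"""

kind, model_path = resolve_dataset_reference(sample_pbir)
```

For a report classified as "local", the relative path tells the pipeline which semantic model in the repository the report should be bound to once both are published.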

3. For the semantic models published in step 1, the script then validates the functionality of the semantic model through a synchronous refresh using Invoke-SemanticModelRefresh. Using the native v1.0 API would be problematic because it is asynchronous: if you issue a refresh, you only know that the semantic model refresh has kicked off, not whether it was successful. To make it synchronous, I’ve written a module that issues an enhanced refresh request to get a request identifier (a GUID). This request identifier can then be passed as a parameter to the Get Refresh Execution Details endpoint to check on that specific request’s status and find out whether the refresh has completed successfully.
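The polling idea behind a synchronous refresh can be sketched in a few lines of Python. This is not the Invoke-SemanticModelRefresh implementation: the status lookup is passed in as a plain function so the sketch stays independent of any HTTP client, and the status strings, defaults, and names below are assumptions that should be checked against the Get Refresh Execution Details documentation.

```python
import time

# Assumed status strings; verify against the REST API documentation.
SUCCESS_STATUSES = {"Completed"}
FAILURE_STATUSES = {"Failed", "Cancelled", "Disabled", "TimedOut"}


def wait_for_refresh(get_status, request_id, poll_seconds=30,
                     timeout_seconds=3600, sleep=time.sleep):
    """Poll get_status(request_id) until the refresh reaches a terminal state.

    Returns True on success and False on failure; raises TimeoutError if no
    terminal status is seen within timeout_seconds.
    """
    waited = 0
    while True:
        status = get_status(request_id)
        if status in SUCCESS_STATUSES:
            return True
        if status in FAILURE_STATUSES:
            return False
        if waited >= timeout_seconds:
            raise TimeoutError(f"Refresh {request_id} still '{status}' after {waited}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Stand-in for the real endpoint call: two in-progress polls, then success.
fake_statuses = iter(["Unknown", "Unknown", "Completed"])
succeeded = wait_for_refresh(
    lambda _request_id: next(fake_statuses),
    "hypothetical-request-id",
    sleep=lambda _seconds: None,  # skip real waiting in this demo
)
```

In a real pipeline, `get_status` would call the Get Refresh Execution Details endpoint with the request identifier and return the status field from the response.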

If the refresh is successful, we move to step 4. Note: The first time a new semantic model is placed in the workspace, the refresh will fail. You have to “prime” the pipeline and set the data source credentials manually. As of April 2024, this is not fully automatable, and the Fabric team at Microsoft has written about it.

4. For each semantic model, Invoke-DQVTesting is called to run the DAX queries that follow the DAX Query View Testing Pattern. Results are then logged to the Azure DevOps pipeline (Figure 3). Any failed test will fail the pipeline.

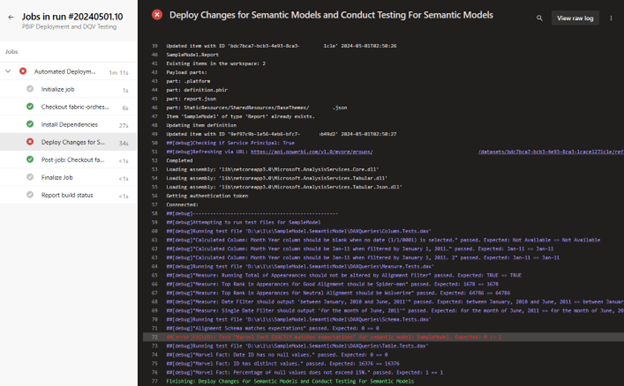

Figure 3 - Example of test results logged by Invoke-DQVTesting
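For readers curious how a script can surface test failures in Azure DevOps, the sketch below formats results using the `##vso[task.logissue type=error]` logging command and derives a non-zero exit code when any test fails. It illustrates the general mechanism only and is not how Invoke-DQVTesting is necessarily implemented; the result tuples and test names are hypothetical.

```python
def summarize(results):
    """Turn (test_name, passed) pairs into pipeline log lines and an exit code.

    Failed tests are emitted as Azure DevOps error issues; the exit code is 1
    if any test failed, otherwise 0.
    """
    lines, failures = [], 0
    for name, passed in results:
        if passed:
            lines.append(f"PASSED: {name}")
        else:
            failures += 1
            lines.append(f"##vso[task.logissue type=error]FAILED: {name}")
    exit_code = 1 if failures else 0
    return lines, exit_code


# Hypothetical test outcomes
sample_results = [
    ("Measure: Total Sales returns a value", True),
    ("Schema: DimDate has expected columns", False),
]

log_lines, exit_code = summarize(sample_results)
# In a pipeline step you would print each line and then call sys.exit(exit_code).
```

Printing the lines to standard output is enough for the pipeline to pick up the error issues, and exiting with a non-zero code marks the step as failed.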

In practice

Do you want to try it out for yourself? I’ve written step-by-step instructions on implementing the pattern with Azure DevOps at this link. Please pay close attention to the prerequisites so your workspace and tenant settings can accommodate this pattern. I will point out that I’ve tried this pattern with success on Fabric workspaces and Premium-Per-User workspaces in the Commercial tenant (sorry GCC, your time will hopefully come).

As always, let me know your thoughts on LinkedIn or Twitter/X on the module and ways to improve it. In my next article, we’ll talk about how to keep track of these test results with the help of OneLake.