Weaving DataOps into Microsoft Fabric - Automating DAX Query View Testing Pattern with Azure DevOps

In my last article, I covered implementing the DAX Query View Testing Pattern to establish a standardized schema and testing approaches for semantic models in Power BI Desktop. This pattern facilitates sharing tests directly within the application. If you’re interested in a demonstration, check out my recent YouTube video on the subject.

Now, as promised, let me discuss automating testing (i.e., Continuous Integration) using PBIP and Git Integration.

DataOps Principle Orchestrate: The beginning-to-end orchestration of data, tools, code, environments, and the analytic team’s work is a key driver of analytic success.

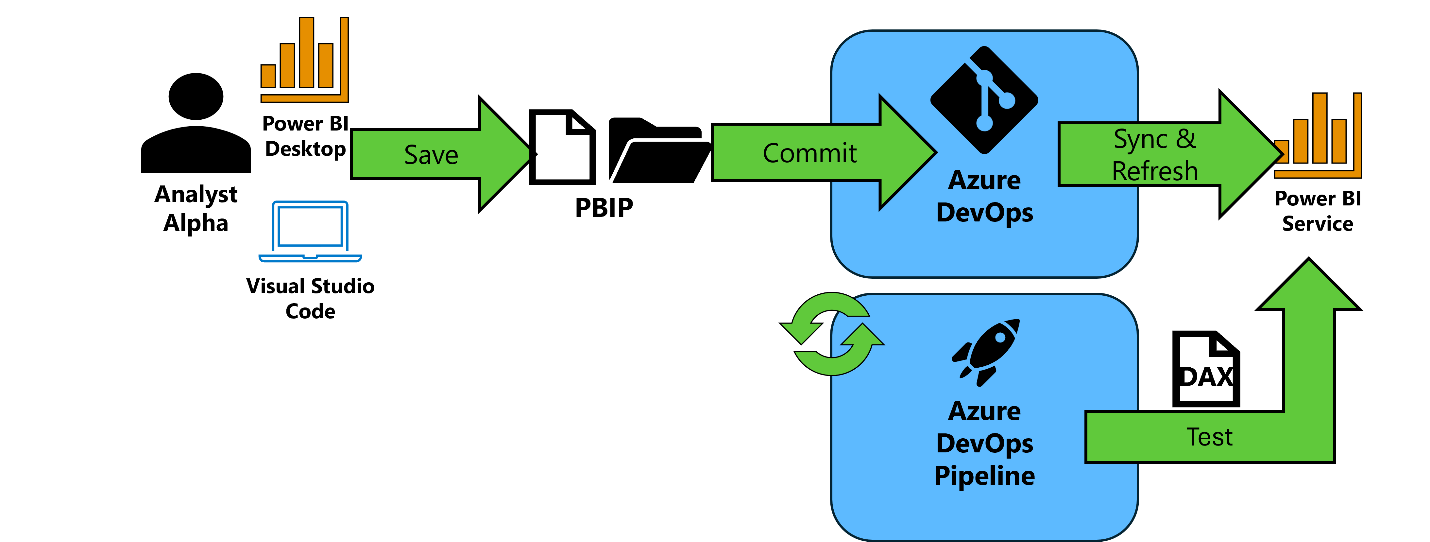

Orchestration through Continuous Integration and Continuous Deployment (CI/CD) is essential for delivering analytics to customers swiftly while mitigating the risks of errors. Figure 1 illustrates the orchestration of automated testing.

Figure 1 – High-level diagram of automated testing with PBIP, Git Integration, and DAX Query View Testing Pattern

High-Level Process

In the process depicted in Figure 1, your team saves their Power BI work in the Power BI Project (PBIP) format and commits those changes to Azure DevOps.

Then, you or your team sync with the workspace and refresh the semantic models. For this article, I am assuming either manual integration or the use of Rui Romano’s code to deploy a PBIP file to a workspace, with semantic models refreshed appropriately. With these criteria met, you can execute the tests.

Automated Testing

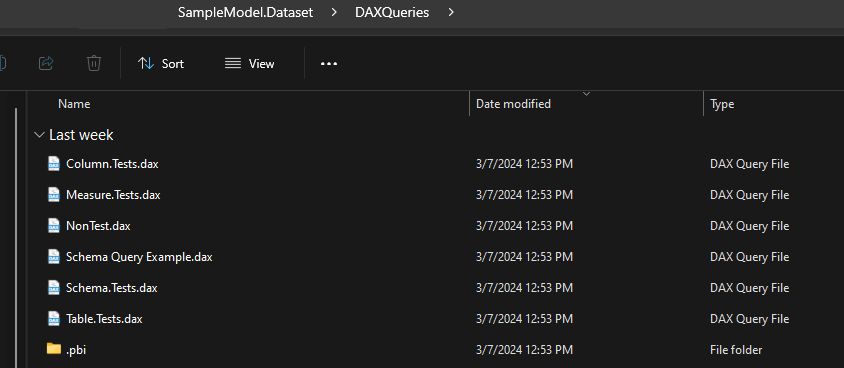

With the PBIP format, each tab in your DAX Query View exists as a separate DAX file in the “.Datasets/DAXQueries” folder (as demonstrated in Figure 2).

Figure 2 - Example of DAX Query Tests in DAXQueries folder for the PBIP format
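To make the folder layout concrete, here is a minimal sketch of how a script could collect those DAX files from a cloned repository. The function name `find_dax_tests` and the example folder name `Sales.Dataset` are hypothetical, and the sketch assumes only what is described above: test queries are `.dax` files sitting directly inside a `DAXQueries` folder under each semantic model folder.

```python
from pathlib import Path


def find_dax_tests(repo_root: str) -> dict[str, list[Path]]:
    """Group .dax files by the semantic model folder that contains them.

    Assumption: every test query lives directly inside a folder named
    "DAXQueries", which in turn sits inside the semantic model folder.
    """
    tests: dict[str, list[Path]] = {}
    for dax_file in sorted(Path(repo_root).rglob("*.dax")):
        # Skip .dax files that are not directly inside a DAXQueries folder.
        if dax_file.parent.name != "DAXQueries":
            continue
        # The grandparent folder is the semantic model, e.g. "Sales.Dataset".
        model_folder = dax_file.parent.parent.name
        tests.setdefault(model_folder, []).append(dax_file)
    return tests


if __name__ == "__main__":
    for model, files in find_dax_tests(".").items():
        print(model, [f.name for f in files])
```

Grouping by model folder keeps the later step simple: each group of files maps to one semantic model in the workspace.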

You can then leverage the Fabric application programming interfaces (APIs) and XMLA to execute each test query against the semantic model in the service. These tests can be executed through a pipeline, or they can run on a schedule to verify that all tests pass several times a day. But how?
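Before getting to the template, here is a rough sketch of what running a single test query against a published semantic model can look like. Note the swap: rather than XMLA, this sketch uses the Power BI REST `executeQueries` endpoint as a stand-in because it is easy to show with the standard library alone. The helper names, IDs, and token handling are hypothetical, and nothing here is taken from the template's code.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_execute_queries_request(
    workspace_id: str, dataset_id: str, dax_query: str, access_token: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request that runs one DAX query."""
    url = f"{API_ROOT}/groups/{workspace_id}/datasets/{dataset_id}/executeQueries"
    body = {
        "queries": [{"query": dax_query}],
        "serializerSettings": {"includeNulls": True},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def rows_from_response(payload: dict) -> list[dict]:
    """Return the rows of the first table of the first query result."""
    return payload["results"][0]["tables"][0]["rows"]
```

Sending the request is then a matter of `urllib.request.urlopen(request)` and passing the parsed JSON body to `rows_from_response`. Acquiring the access token for the service principal or account is left out of the sketch.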

Template

Well, I have a template for that on fabric-dataops-patterns. To get started, you need:

An Azure DevOps project with at least Project or Build Administrator rights

A Premium-backed capacity workspace connected to the repository in your Azure DevOps project. Instructions are provided here. I have tested this template with both Premium Per User and Fabric capacities.

A Power BI tenant with XMLA Read/Write Enabled.

A service principal or account (i.e., a username and password) with a Premium Per User license. If you are using a service principal, you will need to make sure the Power BI tenant allows service principals to use the Fabric APIs. The service principal or account will need at least the Member role in the workspace.

With these requirements met, you can follow these instructions to create the variable group, set up the pipeline, and copy the sample YAML file to get started.

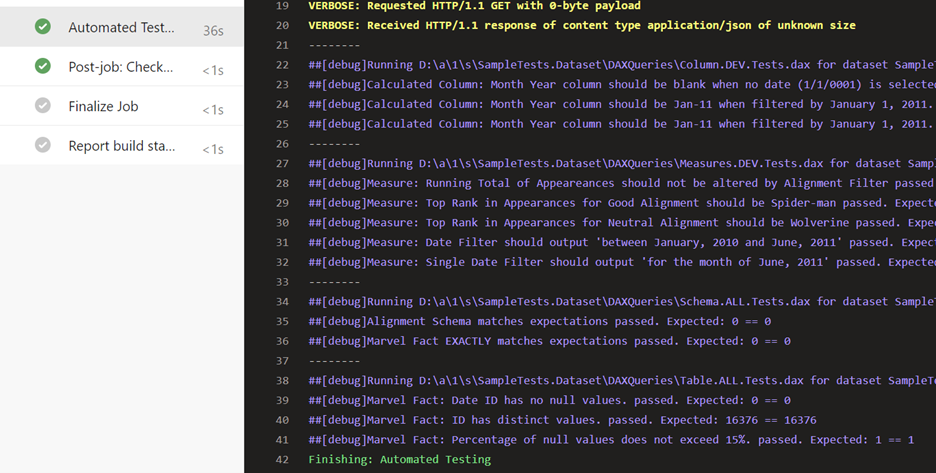

If you follow the steps correctly, any semantic models in the workspace that also exist in the repository and have test files will be queried to determine pass or fail statuses (Figure 3).

Figure 3 - Example of DAX tests conducted through the build agent in Azure DevOps

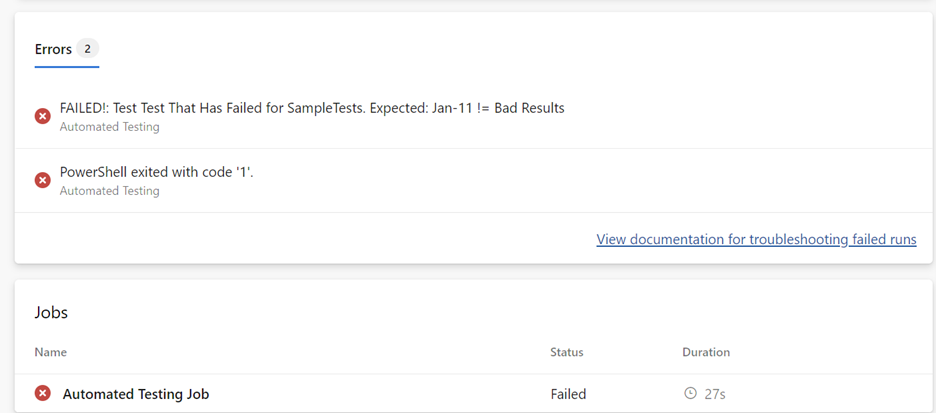

The code logs each failed test as an error, and the pipeline fails (see Figure 4).

Figure 4 - Example of failed test identified with automated testing
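The fail-the-pipeline behavior can be sketched as follows. This is not the template's code. It assumes each test row carries the columns TestName, ExpectedValue, ActualValue, and Passed from the testing pattern's schema (an assumption here, since this article does not list them), and that column names may arrive wrapped in square brackets. The function names are hypothetical. The `##vso[task.logissue type=error]` line is an Azure DevOps logging command that surfaces the message as an error in the run.

```python
import sys


def failed_tests(rows: list[dict]) -> list[str]:
    """Return one message per row whose Passed value is not true."""
    messages = []
    for row in rows:
        # Normalize keys such as "[TestName]" to "TestName".
        clean = {key.strip("[]"): value for key, value in row.items()}
        # Accept True, 1, or "true" as a pass; anything else is a failure.
        passed = str(clean.get("Passed")).lower() in ("true", "1")
        if not passed:
            messages.append(
                f"{clean.get('TestName')}: expected {clean.get('ExpectedValue')}, "
                f"got {clean.get('ActualValue')}"
            )
    return messages


def report(messages: list[str]) -> int:
    """Log each failure as an Azure DevOps error and return an exit code."""
    for message in messages:
        print(f"##vso[task.logissue type=error]{message}")
    return 1 if messages else 0


if __name__ == "__main__":
    sample = [{"[TestName]": "Row count", "[ExpectedValue]": 10,
               "[ActualValue]": 9, "[Passed]": False}]
    sys.exit(report(failed_tests(sample)))
```

Returning a non-zero exit code is what makes a script step fail, so a single failed test is enough to mark the run as failed.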

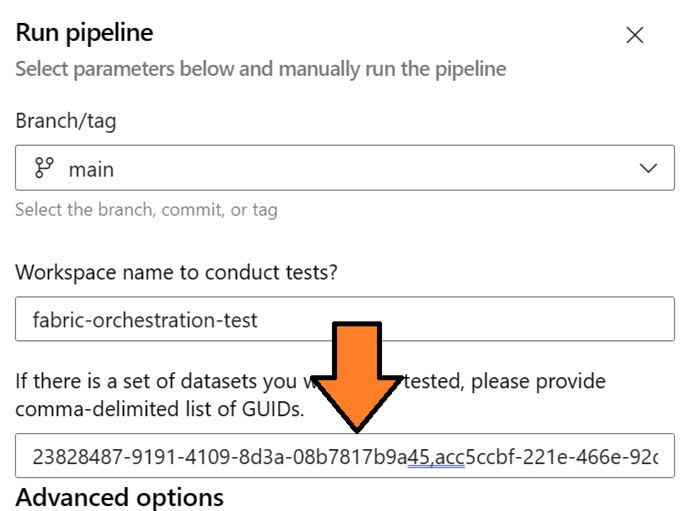

To run tests for select semantic models, pass the semantic model IDs as a comma-delimited string into the pipeline. The pipeline will only conduct tests for those semantic models (see Figure 5).

Figure 5 - Example of automating tests for a select number of semantic models
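Handling the comma-delimited parameter is straightforward. The sketch below shows one way to parse it and narrow the list of models to test. The function names and the dictionary of model IDs to names are hypothetical, and treating an empty string as "test every model" is an assumption chosen to match the default behavior described earlier.

```python
def parse_model_ids(raw: str) -> set[str]:
    """Split a comma-delimited string into a set of trimmed, lowercased IDs."""
    return {part.strip().lower() for part in raw.split(",") if part.strip()}


def select_models(models: dict[str, str], raw_ids: str) -> dict[str, str]:
    """Keep only the models whose ID appears in raw_ids.

    models maps semantic model ID -> display name.
    Assumption: an empty or blank raw_ids means "test every model".
    """
    wanted = parse_model_ids(raw_ids)
    if not wanted:
        return dict(models)
    return {mid: name for mid, name in models.items() if mid.lower() in wanted}


if __name__ == "__main__":
    models = {"AAA-111": "Sales", "BBB-222": "Finance", "CCC-333": "HR"}
    print(select_models(models, "aaa-111, ccc-333"))
```

Lowercasing both sides avoids mismatches when IDs are pasted with different casing, and ignoring empty parts tolerates a trailing comma.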

This is especially helpful if you want to reuse this testing pipeline as a template.

Monitoring

It’s essential to monitor the Azure DevOps pipeline for any failures. I’ve also written about some best practices for setting that up in this article.

Next Steps

I hope you find this helpful in establishing a consistent pattern for testing your semantic models and instituting a repeatable process for automating testing. I’d like to thank Rui Romano for the code provided on the Analysis Services Git repository, as it helped accelerate my team’s work to automate testing with the Fabric APIs.

In future articles, I will cover various aspects of automated testing and how to respond to failures quickly.

As always, let me know what you think on LinkedIn or Twitter/X.

This article was edited by my colleague and senior technical writer, Kiley Williams Garrett.

Git Logo provided by Git - Logo Downloads (git-scm.com)