The Data Journey: Don’t Assume What Worked Last Week Will Work Today

Microsoft technologies and application programming interfaces (APIs) are rapidly evolving, introducing new beneficial features and improvements. However, changes to Power BI or Graph APIs can also affect backend data operations, including the operation of custom connectors like the ones I’ve authored for the Power BI REST API and Planner API. Over the past year, I’ve seen production systems rely on custom connectors, so we can’t assume what worked before an Microsoft update will work after.

The principles of DataOps emphasize the importance of evaluating the data’s journey through the assembly line to identify potential points of failure. A proactive approach ensures that you can respond quickly to changes in the broader IT ecosystem without disrupting operations for users and customers. With many of my production environments using custom connectors, I wanted to set up continuous testing to identify potential failures. But there were a few challenges:

Decoupling test environment from testing - Many connectors require specific details about my tenant and workspaces that I would not want to publish in a public GitHub repository. To ensure the custom connector code and testing code were accessible to external users while safeguarding sensitive data, I needed a way to easily separate the test configurations and remove the globally universal unique identifiers (GUID) only available through my tenant.

Automating Authentication - Several of the custom connectors I wrote, both independently as well as in collaboration with Luke Barker, use Azure Active Directory (AD) Authorization. To facilitate testing, I needed to use a service account. However, the Power Query Software Development Kit (SDK) for Virtual Studio (VS) Code, semi-obfuscated this process. The SDK stored credentials in a way that didn’t expose each step in the terminal (probably for good security reasons).

Deploying – In cases where the custom connector passed automated tests, I needed to automatically remove any testing variables associated with my tenant. This measure would safeguard variables (e.g., passwords, secrets, tenant IDs) from being posted to GitHub.

Standardizing Testing - I also wanted to ensure I could run the same tests locally with a simple command that incorporated my test configuration using the same command for running automated tests in the build pipeline. That way, whether I ran a test locally, the build pipeline ran it, or another contributor ran it in their tenant, the execution steps would be the same.

Solution

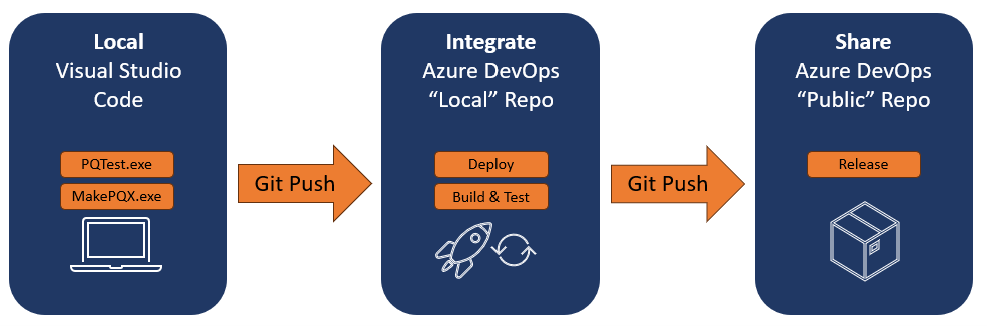

After reading the Microsoft documentation and reading Ben Gribaudo’s article on the Power Query SDK, I started to experiment with tackling each one of these challenges. As shown in Figure 1, I ended up having two repositories:

1) An “internal” repo on Azure DevOps (it could have been a private repo on GitHub, but it’s hard to break habits) to store the code along with my test configuration file.

2) A “public” repo to keep the code open-sourced and releases publicly available. This allowed me to maintain my test configuration in source control while excluding this component during the deployment pipeline in Azure DevOps. With the help of Secret Scanning in Azure DevOps, it also provided an extra layer of protection against accidentally committing sensitive secrets or passwords to the public source control.

Figure 1 - Design for testing, deploying, and sharing custom power query connectors.

Within this high-level design, here is how I tackled each challenge:

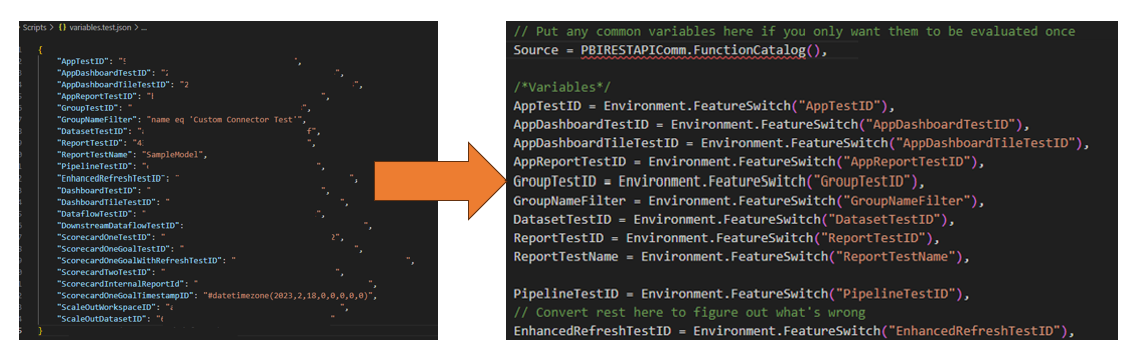

- Decoupling the test environment from testing – Leveraging the PQTest.exe test command, you can provide an environment configuration file as a JSON file with properties and values. I just needed to tweak the testing file (thanks to the template provided by Microsoft) to reference these properties instead of a hard-coded value (Figure 2). This setup enables others to build their test configuration and run the tests in their own environment.

Figure 2 - Separate JSON file stores test variables and the feed the testing scripts.

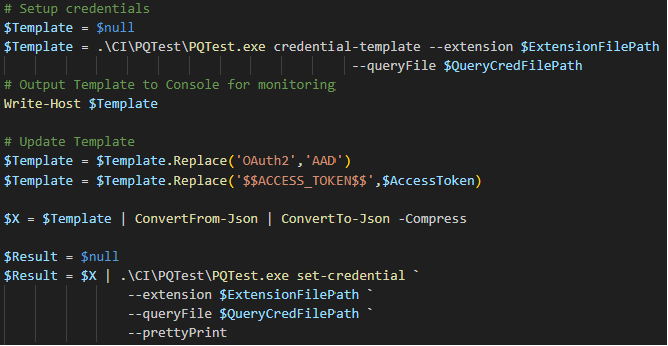

- Automating Authentication - This way, by far, the most challenging aspect and the least documented. It turns out that by using the generate-credential command and providing your compiled connector and test file, you can obtain the appropriate JSON file. Then you need to update the JSON file and add it as an argument when executing the test command. However, when you do attempt this process with a file that uses Environment Configuration, the generate-template does not like it and says you have an error. As a workaround, I provided the generate-template with a basic test file to ensure the custom connector loads successfully. This approach yielded a template file. After some trial and error, I figured out how to replace the template with the credentials in the format required to make the incantation work (Figure 3).

Figure 3 - Example of how to generate, change, and set the credentials with PQTest.exe.

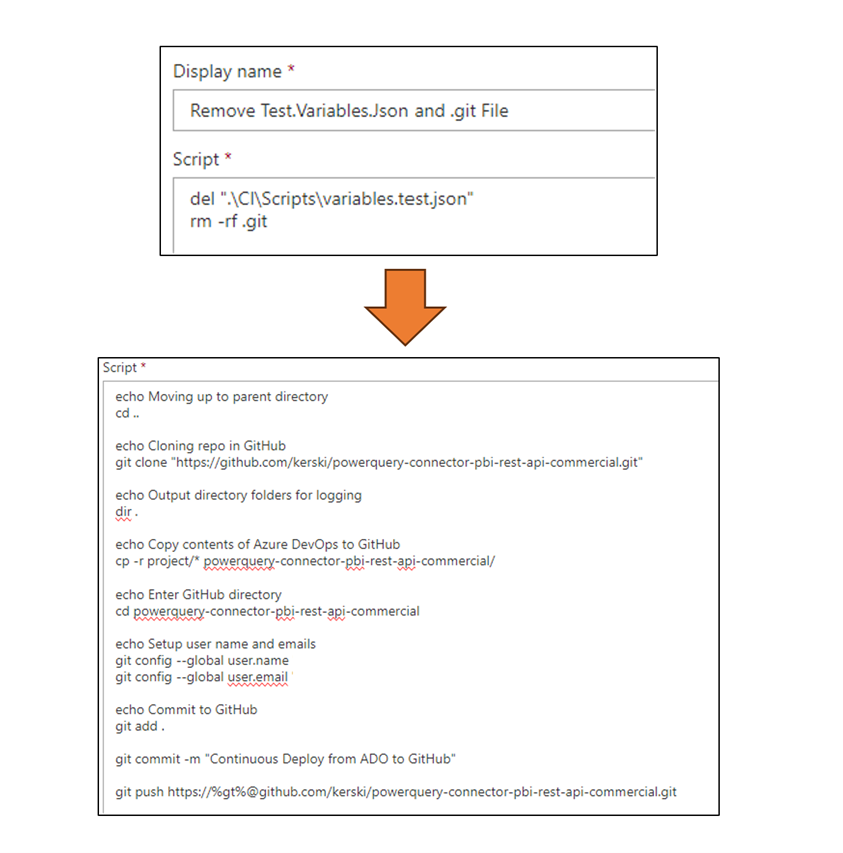

- Deployments – Thanks to Azure DevOps release pipelines and a few stack overflow searches (ChatGPT was not too helpful this time ), I created a two-step release process. As shown in Figure 4, it first removes the variables.test.json file so my personal test settings for my tenant do not go public. Second, it pushes the code to the main branch of the public repository using git command lines. Note that with the git push, there is a %gt% reference. That is the Personal Access Token I had to set up in GitHub to authenticate with the public repository.

Figure 4 - Steps to release from the internal repo to the public repo.

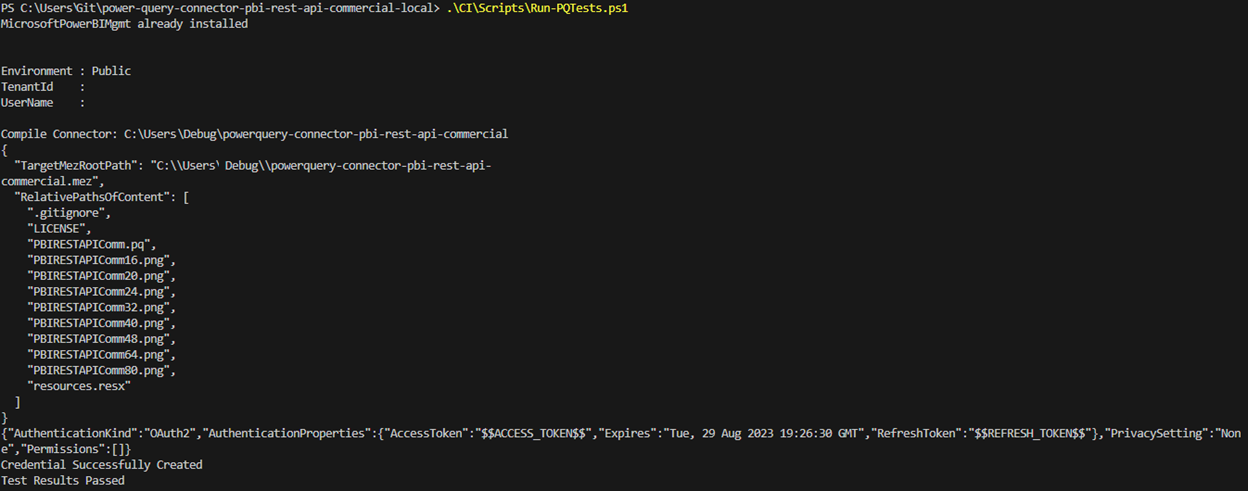

- Standardize Testing - To make sure the tests I run locally would operate similarly, I built a PowerShell script Run-PQTests.ps1 that performs the following:

- Identify (through an environment variable) whether the script is running locally or running in a build agent

- Connect to the Power BI Service

- Compile the custom connector with MakePQX.exe

- Generate the Credential template with PQTest.exe

- Set the Credential with PQTest.exe

- Run the Tests with PQTest.exe

- Check the results and fail the build if the tests fail

Figure 5 demonstrates running the script locally.

Figure 5 - Example of running tests locally.

If you’re interested in diving further into the code, please check out my repository for the Power Query Custom Data Connector for Power BI REST APIs (Commercial) and explore the “CI” subfolder to see the scripts, folder structure, and YAML files. Over the next year, I’ll incorporate more continuous integration and continuous deployment (CI/CD) into custom connectors I build, and I hope this helps you on that journey too. Don’t assume the custom connectors will continue to work, especially if they depend on APIs—automate testing.

I’d like to hear your thoughts, so please let me know what you think on LinkedIn or Twitter.

Finally, if you’re going to be at the SQL Saturday Denver, September 23rd, 2023, I’ll be presenting on custom connectors. I hope to see you there!

This article was edited by my colleague and senior technical writer, Kiley Williams Garrett.